Implementing AI in your application

January 24, 2026

The goal

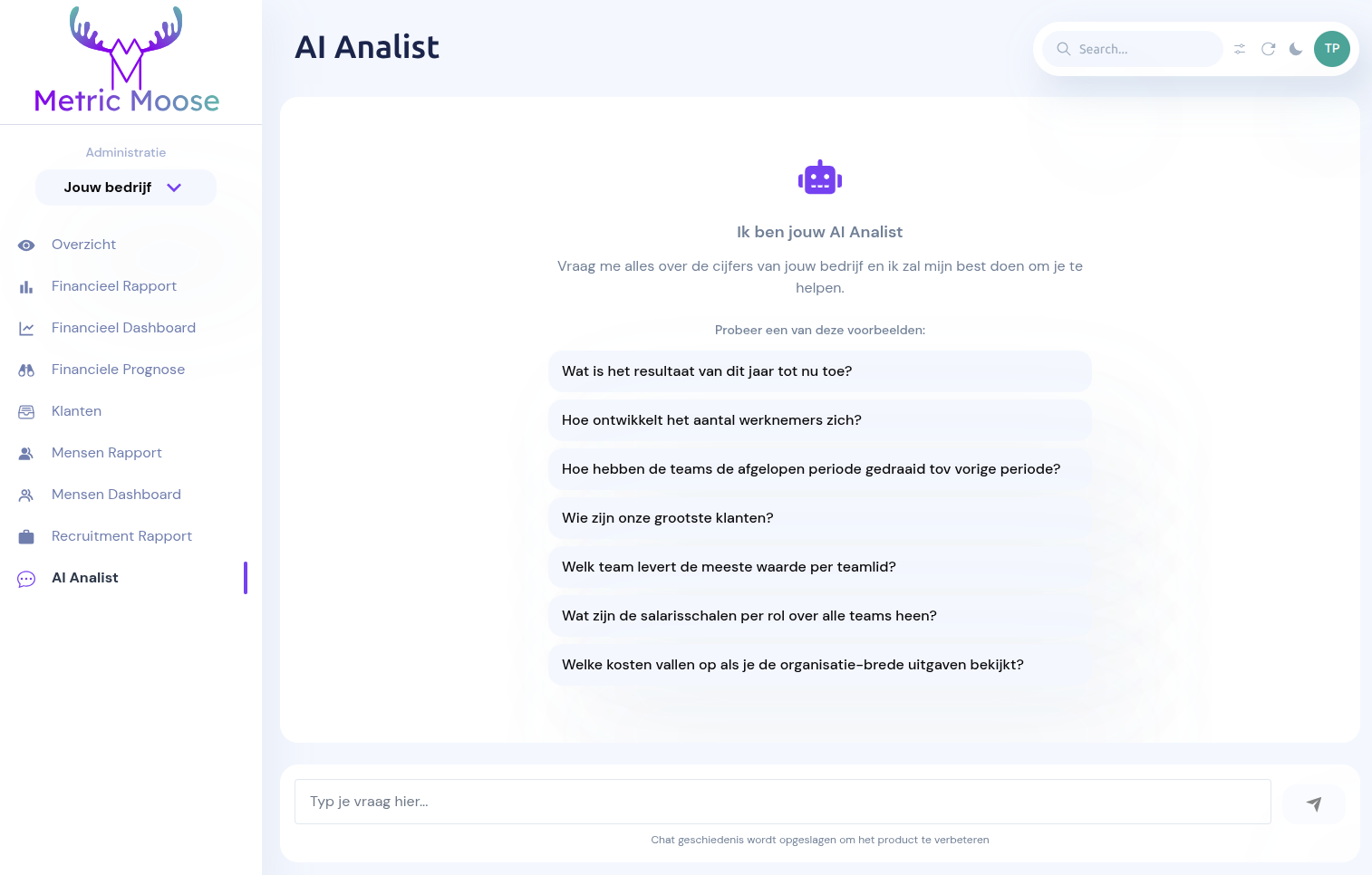

Metric Moose is all about data. It collects data from various sources, stores it, and provides insights through dashboards and alerts. The idea behind the AI Analyst feature is to use AI to analyze the collected data, surface deeper insights, identify patterns, and even predict future trends, so users can make better-informed decisions based on their data.

Lessons learned

Developing the AI Analyst feature for Metric Moose has taught me a lot. Here are a few lessons I’d like to share:

1. Use libraries

In the AI world, developments are moving so fast that it’s smart to work with libraries that handle the connection with different LLM providers for you. This provides a layer of abstraction, so you spend less time keeping up with technical developments and can focus more on functionality.

I’ve used parts of the AI SDK for this implementation.
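To make the idea of an abstraction layer concrete, here is a minimal sketch. It is not the AI SDK's API: the `ChatProvider` interface, the two fake providers, and `askAnalyst` are hypothetical names invented for illustration, and the providers return canned text so the example runs offline.

```typescript
// Hypothetical provider-agnostic interface; not the API of any real library.
interface ChatProvider {
  name: string;
  complete(systemInstruction: string, prompt: string): Promise<string>;
}

// Stand-in providers that return canned text so the sketch runs offline.
// A real implementation would call the vendor's API inside complete().
const fakeProviderA: ChatProvider = {
  name: "provider-a",
  complete: async (_system, prompt) => `A says: ${prompt}`,
};

const fakeProviderB: ChatProvider = {
  name: "provider-b",
  complete: async (_system, prompt) => `B says: ${prompt}`,
};

// Application code depends only on the interface, so switching providers
// means passing a different object rather than rewriting the feature.
async function askAnalyst(provider: ChatProvider, question: string): Promise<string> {
  const system = "You are a data analyst."; // assumed instruction text
  return provider.complete(system, question);
}
```

A library gives you this kind of seam out of the box, plus the provider implementations you would otherwise have to maintain yourself.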

2. Set up an automated test suite

Because you’re trying to control an LLM with textual instructions, it’s sometimes difficult to foresee the full impact of a change. A small adjustment to the system instruction, for example, can improve the answer to one prompt but degrade the answer to another.

To make this visible, I’ve set up a test suite that sends a set of prompts that are common in Metric Moose to the model and checks the results. A check can, for example, verify that expected data appears in the response, or look for words you specifically don’t want to see (like “unfortunately” or “I cannot” 😉).
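The checks described above could look roughly like the sketch below. This is an assumption about one workable shape, not the actual Metric Moose test suite: `PromptCase`, `checkResponse`, and `runSuite` are hypothetical names, and the model is passed in as a plain function so the suite can run against any provider or a stub.

```typescript
// Hypothetical shape of one test case; field names are illustrative.
interface PromptCase {
  prompt: string;
  mustContain: string[];    // data expected in the answer
  mustNotContain: string[]; // phrases that signal a refusal or apology
}

interface CaseResult {
  prompt: string;
  passed: boolean;
  failures: string[];
}

// Checks one model response against a case, ignoring letter case.
function checkResponse(testCase: PromptCase, response: string): CaseResult {
  const text = response.toLowerCase();
  const failures: string[] = [];
  for (const expected of testCase.mustContain) {
    if (!text.includes(expected.toLowerCase())) failures.push(`missing: ${expected}`);
  }
  for (const banned of testCase.mustNotContain) {
    if (text.includes(banned.toLowerCase())) failures.push(`forbidden: ${banned}`);
  }
  return { prompt: testCase.prompt, passed: failures.length === 0, failures };
}

// Sends every prompt to a caller-supplied model function and collects results.
async function runSuite(
  cases: PromptCase[],
  askModel: (prompt: string) => Promise<string>,
): Promise<CaseResult[]> {
  const results: CaseResult[] = [];
  for (const c of cases) {
    results.push(checkResponse(c, await askModel(c.prompt)));
  }
  return results;
}
```

Because model output varies between runs, substring checks like these are deliberately loose: they catch regressions such as refusals or missing figures without requiring an exact match.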

3. Store all conversations

This of course requires permission from your users or customers, but it’s enormously valuable to be able to read back real conversations with the AI model. For troubleshooting, you also want to be able to see which tools were called, with which parameters and with which results.

This way you can quickly discover new use cases and keep refining the system instruction and tools to improve the quality of the answers.
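As a rough sketch of what such a record might hold, the types below capture each turn together with the tools that were called, their parameters, and their results. All names here (`ToolCallRecord`, `ConversationTurn`, `ConversationLog`) are hypothetical, and the in-memory `Map` stands in for whatever database you actually use.

```typescript
interface ToolCallRecord {
  toolName: string;
  parameters: Record<string, unknown>;
  result: unknown;
}

interface ConversationTurn {
  role: "user" | "assistant";
  content: string;
  toolCalls: ToolCallRecord[];
  timestamp: string; // ISO 8601
}

interface ConversationRecord {
  conversationId: string;
  turns: ConversationTurn[];
}

// In-memory stand-in for a database table of conversations.
class ConversationLog {
  private records = new Map<string, ConversationRecord>();

  // Adds a turn, creating the conversation record on first use.
  append(conversationId: string, turn: ConversationTurn): void {
    const record = this.records.get(conversationId) ?? { conversationId, turns: [] };
    record.turns.push(turn);
    this.records.set(conversationId, record);
  }

  // Returns every tool call in a conversation, in order, for troubleshooting.
  toolCallsFor(conversationId: string): ToolCallRecord[] {
    const calls: ToolCallRecord[] = [];
    const record = this.records.get(conversationId);
    if (record) {
      for (const turn of record.turns) calls.push(...turn.toolCalls);
    }
    return calls;
  }
}
```

Storing tool calls next to the message that triggered them makes it possible to replay a conversation step by step when an answer looks wrong.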

4. Privacy & Compliance

There’s a lot of discussion about how different AI providers handle data, and users often don’t like it when their data is shared with them. So make sure your GDPR compliance is in order, and share as little data as possible.

For example: don’t let OpenAI store your conversations, but keep them yourself in your own database. Go through all retention settings carefully and set them to the minimum possible values. OpenAI also offers a Zero Data Retention option, which you might be eligible for.
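One way to share as little data as possible is to pass only an explicit allow-list of fields to the model. The sketch below illustrates that idea under assumptions: `pickAllowedFields` and the field names are hypothetical, and a real setup would still need a review of what each allowed field can reveal.

```typescript
type Row = Record<string, unknown>;

// Copies only allow-listed fields, so anything not named explicitly
// (for example personal data) never reaches the prompt.
function pickAllowedFields(row: Row, allowed: readonly string[]): Row {
  const out: Row = {};
  for (const key of allowed) {
    if (Object.prototype.hasOwnProperty.call(row, key)) {
      out[key] = row[key];
    }
  }
  return out;
}

// Hypothetical example row and allow-list.
const exampleRow: Row = { metric: "signups", value: 42, userEmail: "jane@example.com" };
const sharedWithModel = pickAllowedFields(exampleRow, ["metric", "value"]);
// sharedWithModel is { metric: "signups", value: 42 }; userEmail is dropped.
```

An allow-list fails safe: a new column added to the data later stays private until someone deliberately adds it to the list.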

Conclusion

💡 Building an AI feature is not just about technology; it’s also about product thinking, quality control, and taking responsibility for how data is handled.
